Undefined Behavior in AI Systems: The 2026 Cybersecurity Crisis

Table of Contents

- Undefined Behavior: The Invisible Architect of Chaos

- The 2026 AI Infrastructure Collapse Explained

- Technical Anatomy of Undefined Behavior

- Why Modern AI Kernels Are Vulnerable

- Comparing C++ Standards and Safety Protocols

- Economic Impact: Data Analysis

- The Rust Migration Mandate

- Regulatory Response to Memory Safety

- Future-Proofing Against Undefined States

- Conclusion: Redefining Digital Trust

Undefined behavior is no longer just a theoretical concept in computer science textbooks; in February 2026, it became the catalyst for the most significant digital infrastructure crisis of the decade. As the world increasingly relies on autonomous agents and generative AI systems, the foundational code running beneath these complex neural networks—primarily written in C and C++—has exposed a critical vulnerability. This article provides an exhaustive analysis of how undefined behavior (UB) in low-level system kernels precipitated the 2026 Global AI Outage, examining the technical roots, the economic fallout, and the urgent shift toward memory-safe languages like Rust.

Undefined Behavior: The Invisible Architect of Chaos

Undefined behavior refers to program execution for which the language standard imposes no requirements at all. In languages like C and C++, the compiler is entitled to assume that UB never occurs, so it may delete the offending code, optimize around it, or generate instructions that leave the system in an erratic state. For decades, developers have managed UB through rigorous testing and static analysis. However, the scale of software deployment in 2026, driven by massive AI clusters, has pushed legacy codebases to their breaking points.

The core issue lies in the optimization assumptions made by modern compilers. When a compiler encounters a construct whose behavior is undefined, it assumes that the triggering condition will never happen and optimizes accordingly. If it does happen in a live environment (for example, a signed integer overflow in the integer arithmetic behind a neural network’s weight calculation), the resulting machine code may corrupt memory, crash the kernel, or silently produce incorrect values that propagate through the AI model. In the context of the 2026 crisis, this silent corruption led to autonomous systems making catastrophic decisions based on hallucinated data.
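The overflow case can be made concrete with a minimal sketch. A check written after the fact, such as testing whether a + b < a, only works if the overflow has already happened, so the compiler may assume it is always false and remove it. Checking the operands before adding avoids the problem. The function name checked_add is hypothetical and chosen for illustration only.

```cpp
#include <limits>

// Returns true and stores a + b in `out` when the sum fits in an int.
// Returns false and leaves `out` untouched when the addition would overflow.
// The range test runs BEFORE the addition, so no overflowing signed
// arithmetic is ever evaluated and the compiler has nothing to assume away.
bool checked_add(int a, int b, int& out) {
    constexpr int kMax = std::numeric_limits<int>::max();
    constexpr int kMin = std::numeric_limits<int>::min();
    if (b > 0 && a > kMax - b) return false;  // sum would exceed the maximum
    if (b < 0 && a < kMin - b) return false;  // sum would fall below the minimum
    out = a + b;
    return true;
}
```

Callers have to handle the false branch explicitly, which is the point: the overflow becomes a visible code path rather than an assumption buried in the optimizer.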

The 2026 AI Infrastructure Collapse Explained

On February 12, 2026, major cloud providers experienced a simultaneous degradation in their AI inference services. Initial reports suggested a coordinated cyberattack, but forensic analysis revealed a more mundane yet terrifying culprit: a race condition leading to undefined behavior in a widely used tensor processing library. This library, optimized for speed using aggressive C++ pointer arithmetic, failed to handle a specific edge case involving non-contiguous memory blocks.

The resulting “Undefined” state did not immediately crash the systems. Instead, it caused a cascading failure where the contents of valid memory addresses were overwritten with garbage data. Because the behavior was undefined, nothing guaranteed a consistent outcome, and different processor architectures failed in different ways. ARM-based edge devices simply froze, while x86-based server clusters continued to process data with corrupted in-memory state, leading to financial algorithms executing erratic trades and smart city traffic grids entering deadlock modes.
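The library code behind the incident is not reproduced here, so the following is only a hypothetical sketch of the general failure class: several threads updating shared state. With a plain integer counter the loop below would be a data race and therefore undefined behavior. Declaring the counter as std::atomic makes each increment a synchronized read-modify-write. The function name count_in_parallel is an assumption for illustration.

```cpp
#include <atomic>
#include <cstdint>
#include <thread>
#include <vector>

// Each of `num_threads` threads adds `increments_per_thread` to one shared
// counter. std::atomic guarantees that concurrent increments are not lost.
std::uint64_t count_in_parallel(unsigned num_threads,
                                std::uint64_t increments_per_thread) {
    std::atomic<std::uint64_t> counter{0};
    std::vector<std::thread> workers;
    workers.reserve(num_threads);
    for (unsigned t = 0; t < num_threads; ++t) {
        workers.emplace_back([&counter, increments_per_thread] {
            for (std::uint64_t i = 0; i < increments_per_thread; ++i) {
                // Relaxed ordering is enough for a pure counter: only the
                // atomicity of the increment matters here.
                counter.fetch_add(1, std::memory_order_relaxed);
            }
        });
    }
    // join() makes every worker's increments visible before the final load.
    for (auto& worker : workers) worker.join();
    return counter.load();
}
```

A mutex around a non-atomic counter would be equally valid. The atomic version is shown because it keeps the hot loop free of locks, which matters in throughput-sensitive code.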

Technical Anatomy of Undefined Behavior

To understand the severity of the 2026 crisis, one must understand the technical mechanisms of UB. In the C++26 standard, despite attempts to deprecate unsafe features, several historic pitfalls remain. The most common forms of undefined behavior that plague modern AI infrastructure include:

- Signed Integer Overflow: Unlike unsigned integers, which wrap around modulo 2^n, signed integer overflow is undefined. Compilers often optimize loops on the assumption that it never happens, so an overflow check written after the arithmetic can be removed and a loop that relied on it may never terminate.

- Null Pointer Dereference: Accessing memory through a null pointer is undefined. While modern OSs usually segfault, aggressive compiler optimizations can remove checks for null if earlier code suggests the pointer “couldn’t” be null.

- Strict Aliasing Violations: Accessing an object through a pointer of an incompatible type is undefined. The compiler assumes that pointers to unrelated types never refer to the same memory, so it may reorder or cache loads and stores in ways that corrupt data.

- Data Races: In multithreaded AI pipelines, unsynchronized accesses to the same memory location from different threads, at least one of which is a write, result in UB.
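Two of the pitfalls listed above have well-known defined alternatives, sketched below under the assumption of a platform with 32-bit IEEE-754 floats (enforced by the static_assert lines). The helper names float_bits and first_or are hypothetical.

```cpp
#include <cstdint>
#include <cstring>
#include <limits>

static_assert(sizeof(float) == sizeof(std::uint32_t),
              "sketch assumes a 32-bit float");
static_assert(std::numeric_limits<float>::is_iec559,
              "sketch assumes IEEE-754 floats");

// Strict aliasing: reading a float through a uint32_t* is undefined.
// Copying the bytes with std::memcpy is well-defined, and optimizing
// compilers typically reduce it to a plain register move.
std::uint32_t float_bits(float value) {
    std::uint32_t bits = 0;
    std::memcpy(&bits, &value, sizeof bits);
    return bits;
}

// Null pointers: test the pointer BEFORE the first dereference. If the
// dereference came first, the compiler could treat a later null check as
// unreachable and delete it.
float first_or(const float* data, float fallback) {
    if (data == nullptr) return fallback;
    return data[0];
}
```

On such a platform float_bits(1.0f) yields 0x3F800000, the IEEE-754 encoding of 1.0.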

Why Modern AI Kernels Are Vulnerable

AI frameworks like PyTorch and TensorFlow are Python-based at the user level, but their performance-critical backends are written in C++ and CUDA. As models grew larger in 2025 and 2026, engineers prioritized execution speed above all else, often disabling safety checks (like bounds checking) in production builds. The “Undefined” risks were calculated gambles that eventually failed.

The complexity of these systems means that a single line of code invoking undefined behavior in a low-level linear algebra routine can destabilize an entire trillion-parameter model. In the 2026 incident, a buffer overflow in a custom kernel used for attention mechanisms could in theory have allowed an attacker to inject code, though the actual damage was self-inflicted system instability. The industry is now facing a reckoning: performance cannot come at the cost of defined, predictable behavior.
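As a rough illustration of what an always-on bounds check looks like for a non-contiguous layout, the sketch below validates both the logical index and the computed offset before touching memory. StridedView2D and checked_load are hypothetical names and do not come from any real tensor library.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical 2-D view over a flat buffer. Strides are counted in elements,
// so a row stride larger than `cols` describes a non-contiguous layout.
struct StridedView2D {
    const std::vector<float>* buffer;  // non-owning; must outlive the view
    std::size_t rows;
    std::size_t cols;
    std::size_t row_stride;  // elements between consecutive rows
    std::size_t col_stride;  // elements between consecutive columns
};

// Stores element (r, c) in `out` and returns true, or returns false when the
// index or the computed offset falls outside the underlying buffer.
// std::size_t arithmetic wraps instead of invoking UB, so even absurd strides
// cannot cause an out-of-bounds read; a production version would also reject
// offsets that wrapped around.
bool checked_load(const StridedView2D& view, std::size_t r, std::size_t c,
                  float& out) {
    if (view.buffer == nullptr || r >= view.rows || c >= view.cols) return false;
    const std::size_t offset = r * view.row_stride + c * view.col_stride;
    if (offset >= view.buffer->size()) return false;
    out = (*view.buffer)[offset];
    return true;
}
```

The second check is the one that matters for the edge case: a view can carry in-range row and column indices and still point past the end of the buffer if its strides are inconsistent with the allocation.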

Comparing C++ Standards and Safety Protocols

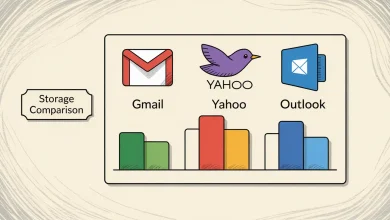

The C++ standards committee has made strides with C++20 and C++23 to introduce more safety, but backward compatibility retains the specter of UB. In contrast, memory-safe languages rule out most of these classes of errors at compile time and turn the remainder into defined runtime failures.

| Feature | C++ (Legacy/Modern) | Rust (Safety Standard) | Impact on AI Systems |
|---|---|---|---|
| Memory Access | Manual management; UB on out-of-bounds access. | Borrow checker enforces safety; panics on out-of-bounds indexing. | C++ risks silent data corruption; Rust stops with a defined panic instead. |
| Concurrency | Data races are UB; undefined execution order. | “Fearless concurrency”; safe code containing a data race does not compile. | Critical for parallel GPU training clusters. |
| Null Safety | Null pointers exist; dereferencing is UB. | No null references in safe code; uses Option<T> types. | Eliminates the “billion-dollar mistake” in logic flows. |
| Integer Overflow | Signed overflow is UB. | Defined behavior (panic in debug builds, wrapping in release builds by default). | Overflow in weight or index arithmetic becomes deterministic and detectable. |
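For codebases that stay in C++, the Null Safety row can be partly approximated with std::optional from C++17. The sketch below is an assumption-laden illustration rather than an equivalent of Rust's Option<T>, and the function name first_nan is hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <optional>
#include <vector>

// Returns the index of the first NaN in `weights`, or std::nullopt if every
// value is a number. The "not found" case is part of the return type, so a
// caller cannot mistake it for a valid index or receive a null pointer.
std::optional<std::size_t> first_nan(const std::vector<float>& weights) {
    for (std::size_t i = 0; i < weights.size(); ++i) {
        if (std::isnan(weights[i])) return i;
    }
    return std::nullopt;
}
```

The approximation has a gap that matters for this article: reading an empty std::optional with the * operator is itself undefined behavior, whereas calling value() throws std::bad_optional_access. Rust closes that gap by making the compiler require the empty case to be handled.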

Economic Impact: Data Analysis

The economic toll of the “Undefined” crisis of 2026 has been staggering. Financial analysts estimate that the three-day period of instability cost the global economy approximately $450 billion. This figure includes lost productivity, cloud service credits, and the immediate depreciation of AI-centric stock indices.

Beyond direct costs, the reputational damage to “black box” AI systems is incalculable. Enterprise adoption of autonomous agents slowed by 40% in Q1 2026 as CTOs demanded audits of the underlying codebases. The insurance industry has responded by creating new exclusion clauses for damages resulting from “known undefined behavior” in software contracts, effectively forcing vendors to prove their code is free of UB through formal verification methods.

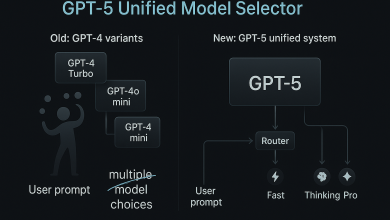

The Rust Migration Mandate

In response to the crisis, major tech giants have accelerated their transition to Rust. Rust’s strict compile-time guarantees effectively eliminate undefined behavior regarding memory safety and data races in safe code, meaning code outside unsafe blocks. By 2026, the Linux kernel had already integrated significant Rust components, but the user-space AI libraries were lagging behind.

The “Rustification” of the AI stack is now a primary objective for the remainder of 2026. This involves rewriting critical tensor operations in Rust or using safe wrappers around existing C++ code. While this incurs a development cost, the elimination of UB justifies the investment. Tech leaders are calling this the “Safety Singularity,” the point where software safety becomes a prerequisite for further AI advancement.
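The safe-wrapper idea is independent of the target language. The sketch below shows it in C++ to stay consistent with the other examples. A Rust wrapper would expose the same checked boundary across a foreign-function interface. Both legacy_axpy and safe_axpy are hypothetical stand-ins, not functions from an actual library.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Stand-in for an existing unchecked routine: y[i] += a * x[i] for i < n.
// It trusts the caller completely; a wrong `n` reads or writes out of bounds.
void legacy_axpy(float a, const float* x, float* y, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) y[i] += a * x[i];
}

// Checked boundary: the length comes from the containers themselves, and a
// mismatch is rejected before any raw pointer is handed to the legacy code.
void safe_axpy(float a, const std::vector<float>& x, std::vector<float>& y) {
    if (x.size() != y.size()) {
        throw std::invalid_argument("safe_axpy: x and y must have equal length");
    }
    legacy_axpy(a, x.data(), y.data(), x.size());
}
```

The wrapper adds one comparison per call rather than one per element, so the cost stays negligible for large tensors while the unchecked routine is no longer reachable with inconsistent arguments.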

Regulatory Response to Memory Safety

Governments have swiftly stepped in. The US Cybersecurity and Infrastructure Security Agency (CISA) and the European Union’s Digital Sovereignty Board have issued joint guidance. The “2026 Memory Safety Act” proposes that any software deployed in critical infrastructure—including healthcare AI and autonomous transport—must be written in a memory-safe language or subjected to rigorous formal verification to prove the absence of undefined behavior.

This regulation challenges the dominance of C++ in the high-performance computing sector. Vendors are now required to provide a “Safety Bill of Materials” (SBOM), not to be confused with the conventional Software Bill of Materials that shares the acronym, which details the language composition of their products and documents any potential sources of UB. Non-compliance carries heavy fines, shifting the liability for undefined behavior from the user to the vendor.

Future-Proofing Against Undefined States

Preventing undefined behavior requires a multi-layered approach. Static analysis tools have evolved to detect subtle UB patterns that previous generations missed. AI itself is being used to audit code, ironically using the very systems that are vulnerable to fix the vulnerabilities. However, the ultimate solution is architectural.

Future systems will likely employ “Checked C” or similar dialects that enforce bounds checking through the compiler, combining static proofs with inserted runtime checks, and pair them with hardware support. The rise of CHERI (Capability Hardware Enhanced RISC Instructions) architecture in 2026 server chips offers hardware-level protection against memory safety violations, turning what was once undefined behavior into a defined, trappable exception. For more on the technical specifications of these behaviors, developers should consult the standard documentation on undefined behavior to understand the depth of the rabbit hole.

Conclusion: Redefining Digital Trust

Undefined behavior represents the chaotic element in our increasingly ordered digital world. The events of February 2026 served as a wake-up call that we cannot build the future of intelligence on a foundation of uncertainty. By acknowledging the risks of UB and embracing memory-safe paradigms, the technology sector can restore trust. The era of “move fast and break things” is over; the new era demands we move correctly and define every behavior, ensuring that the systems governing our lives remain predictable, safe, and secure.