Claude AI Service Disruption: Sonnet 4.6 Launch Triggers Outage


Claude AI has been grappling with significant service disruptions throughout February 2026, culminating in a severe outage on February 18 that left millions of users unable to access key functionality. Just twenty-four hours after the highly anticipated release of the Claude Sonnet 4.6 model, reports of instability surged, highlighting the immense infrastructural challenges facing Anthropic as it scales to meet unprecedented enterprise demand. This latest incident, characterized by persistent “skills-related service errors” and API timeouts, underscores the fragility of the current generative AI ecosystem as model complexity outpaces hardware resilience.

The February 18 Service Disruption

On the morning of February 18, 2026, users across North America, Europe, and Asia Pacific began reporting that they could not access the Claude.ai chat interface or the Claude Desktop App. The disruption began at approximately 8:16 AM ET, coinciding with peak start-of-workday traffic in the United States. Unlike previous outages, which were often total blackouts, this event was marked by a specific technical failure: the “Intermittent error in skills-related functionality.”

According to the official status page at status.claude.com, the engineering team identified elevated error rates specifically affecting the new capabilities introduced in the Sonnet 4.6 update. Users attempting to upload new “Skills”—custom instruction sets designed to reduce non-determinism in AI responses—were met with 500 Internal Server Errors. For enterprise clients relying on these Skills for automated workflows, the platform became effectively unusable, even if the basic chat interface remained intermittently responsive for simple queries.

This incident is not an isolated event but part of a growing pattern of instability. Earlier in the month, on February 3, Claude Code experienced a similar blackout that left developers staring at error screens for nearly an hour. The recurrence of these issues suggests that Anthropic’s backend orchestration layer is struggling to manage the complex routing required by its newest, most powerful models.

The Sonnet 4.6 Launch Factor

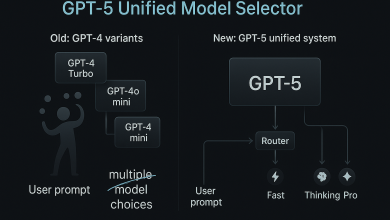

The timing of the outage is inextricably linked to the release of Claude Sonnet 4.6 on February 17, 2026. This new model was marketed as a “full upgrade” across coding, agent planning, and computer use, boasting a 1 million token context window in beta. Anthropic aggressively pushed this update, making Sonnet 4.6 the default model for all Free and Pro users immediately upon release.

The sudden shift of millions of active users to a more computationally intensive model likely created a “thundering herd” effect on Anthropic’s inference clusters. Sonnet 4.6 is designed to be more agentic, meaning it performs more internal reasoning steps and “computer use” actions per user prompt than its predecessor, Sonnet 4.5. This significantly increases the inference compute consumed per request. When millions of users simultaneously attempted to test the new coding and agentic features, the load balancers managing the “Skills” database, which stores user-defined tools and workflows, likely reached saturation.
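On the client side, the standard defense against a thundering herd of retries is exponential backoff with “full jitter”: each failed caller waits a random interval whose upper bound doubles with every attempt, so clients that failed at the same moment do not all retry at the same moment. The sketch below is a generic illustration; the function name, base delay, and cap are assumptions, not values taken from Anthropic’s SDKs.

```python
import random
from typing import List, Optional


def full_jitter_delays(attempts: int, base: float = 0.5, cap: float = 30.0,
                       rng: Optional[random.Random] = None) -> List[float]:
    """Return one randomized sleep time (seconds) per retry attempt.

    Attempt n sleeps a uniform random time in [0, min(cap, base * 2**n)].
    The base and cap values are illustrative assumptions.
    """
    rng = rng if rng is not None else random.Random()
    return [rng.uniform(0, min(cap, base * 2 ** n)) for n in range(attempts)]


if __name__ == "__main__":
    rng = random.Random(42)
    # 1,000 hypothetical clients that all failed at the same instant.
    no_jitter = {min(30.0, 0.5 * 2 ** 3) for _ in range(1000)}
    jittered = {round(full_jitter_delays(4, rng=rng)[3], 2) for _ in range(1000)}
    print("distinct 4th-retry times without jitter:", len(no_jitter))  # always 1
    print("distinct 4th-retry times with jitter:", len(jittered))
```

Without jitter, every client computes the identical delay and the retries land as one synchronized spike; with jitter, the same retries are spread across the whole window.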

Deciphering the ‘Skills-Related Service Error’

The specific error message displayed to users, “Intermittent errors in skills-related functionality,” points to the architectural bottleneck behind this outage. In the Claude ecosystem, “Skills” are not just text prompts; they are executable logic blocks that the model can call upon to perform reliable, repeatable tasks. This feature was introduced to solve the “context rot” problem, where overloading a chat with files degrades performance.

When this service fails, it breaks the core value proposition of Claude for business users. The error most likely reflects a failure in the retrieval-augmented generation (RAG) pipeline or the vector database layer that serves these skills to the model context. Reports from developers on GitHub and Reddit indicated that while standard text generation was possible, any request involving a custom tool, file analysis, or the “Computer Use” API resulted in an immediate timeout. That pattern points to a failure in the auxiliary services that support the heavy lifting of the Sonnet 4.6 architecture, rather than in the core LLM inference engine itself.
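The reasoning here, that errors concentrated in skill and tool requests while plain text generation kept working implicate an auxiliary service, is the same fault-localization step an operator might run over request logs. A minimal sketch, assuming hypothetical log records of (request kind, HTTP status) pairs; the kind labels, the sample data, and the 50% threshold are illustrative, not Anthropic telemetry.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def error_rate_by_kind(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Fraction of 5xx responses per request kind."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for kind, status in records:
        totals[kind] += 1
        if status >= 500:  # 500 errors and 504 gateway timeouts both count
            errors[kind] += 1
    return {kind: errors[kind] / totals[kind] for kind in totals}


def suspect_kinds(rates: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Request kinds whose error rate meets the (illustrative) threshold."""
    return sorted(kind for kind, rate in rates.items() if rate >= threshold)


if __name__ == "__main__":
    # Hypothetical sample shaped like the reports: plain chat mostly works,
    # skill/tool requests mostly fail.
    sample = ([("plain_text", 200)] * 95 + [("plain_text", 500)] * 5
              + [("skill_or_tool", 504)] * 40 + [("skill_or_tool", 200)] * 10)
    rates = error_rate_by_kind(sample)
    print(rates)                 # {'plain_text': 0.05, 'skill_or_tool': 0.8}
    print(suspect_kinds(rates))  # ['skill_or_tool']
```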

Downdetector Data & User Impact

Data from Downdetector confirms the severity of the spike. At the peak of the disruption, reports exceeded 24,000 per minute, a figure comparable to major AWS regional outages. The geographic heat map showed a concentration of errors in tech hubs: San Francisco, New York, London, and Singapore, correlating with Anthropic’s heavy B2B user base.

| Metric | Claude AI Outage (Feb 18, 2026) | ChatGPT Outage (Feb 3, 2026) |
|---|---|---|
| Primary Trigger | Model Rollout (Sonnet 4.6) | Infrastructure Power Failure |
| Peak Downdetector Reports | ~24,000 reports/min | ~45,000 reports/min |
| Primary Symptom | Skills/Tool Use Failure (500 Error) | Total Login/Auth Failure |
| Duration | Intermittent (Ongoing 6+ Hours) | Hard Down (4 Hours) |
| Affected Component | API & Agentic Tools | Web Interface & History |

Users on social media expressed frustration not just with the downtime, but with the degradation of the “smart” features they pay for. Pro users reported that even when the model worked, it defaulted to “dumber” behavior, ignoring instructions or hallucinating code libraries, which is consistent with a system shedding load by reducing inference quality.

Comparative Analysis: Claude vs. ChatGPT

The February 18 outage draws immediate comparisons to the massive disruption earlier this month involving OpenAI. As detailed in our analysis of the February 3 ChatGPT outage, the AI industry is currently in a state of fragile expansion. However, the nature of these two events differs significantly.

The ChatGPT outage was a “hard down” event, likely caused by a lower-level infrastructure or power failure that severed access completely. In contrast, the Claude outage is a “brownout” caused by software complexity. The Sonnet 4.6 release introduced new layers of abstraction—specifically the Skills and Agentic coding features—that require complex database lookups before the model even generates a token. This complexity makes the system more prone to partial failures, where the chat works but the “brain” (the agentic capabilities) is lobotomized.
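The brownout pattern follows from where the dependency sits in the request path. The hypothetical handler sketched here looks up a Skill before generating anything, so when the skill store fails, only skill requests return a 500 while plain prompts still succeed. Every class and function name is invented for illustration; this is not Anthropic’s actual architecture.

```python
from typing import Optional, Tuple


class SkillStoreUnavailable(Exception):
    """Raised when the (hypothetical) skill store cannot serve a lookup."""


class SkillStore:
    """Hypothetical auxiliary service that returns a Skill's instructions."""

    def __init__(self, healthy: bool) -> None:
        self.healthy = healthy

    def load(self, skill_name: str) -> str:
        if not self.healthy:
            raise SkillStoreUnavailable(skill_name)
        return f"<instructions for {skill_name}>"


def generate(prompt: str, context: str = "") -> str:
    """Placeholder for core model inference, which stays healthy here."""
    return f"answer({context}{prompt})"


def handle(prompt: str, skill_name: Optional[str], store: SkillStore) -> Tuple[int, str]:
    """Return (HTTP status, body). Plain prompts never touch the skill store."""
    if skill_name is None:
        return 200, generate(prompt)
    try:
        # The lookup runs before a single token is generated.
        context = store.load(skill_name)
    except SkillStoreUnavailable:
        return 500, "Internal Server Error"
    return 200, generate(prompt, context)


if __name__ == "__main__":
    degraded = SkillStore(healthy=False)
    print(handle("hello", None, degraded))                      # (200, 'answer(hello)')
    print(handle("review this diff", "code-review", degraded))  # (500, 'Internal Server Error')
```

Because the healthy and failing paths share the same inference function, monitoring that only checks whether the model answers a plain prompt would report this system as up.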

The Infrastructure Reality: GPUs and Series G

To understand why these outages are happening, one must look at the hardware reality. Anthropic recently closed a massive $30 billion Series G funding round on February 12, 2026, valuing the company at $380 billion. A significant portion of this capital is earmarked for compute infrastructure, specifically Nvidia’s Blackwell and Rubin GPUs.

However, money cannot instantly buy stability. As we discussed in our Nvidia stock analysis for Feb 2026, the supply chain for these advanced accelerators remains tight. Anthropic is likely running its new Sonnet 4.6 model on clusters that are already close to their capacity limits. The “computer use” capability of Sonnet 4.6 requires the model to process visual data (screenshots) in real time, which is considerably more computationally expensive than processing text alone. The outage suggests that the physical infrastructure available on AWS (Anthropic’s primary cloud partner) may not yet be sufficient to handle the global rollout of such a heavy multimodal workload.

Enterprise API and Developer Fallout

The most damaging aspect of this outage is its impact on the API ecosystem. Thousands of startups and enterprise tools now rely on Claude’s API for backend intelligence. The February 18 disruption caused a cascade of failures across the SaaS industry, as applications calling Claude’s Sonnet models through the API, including the newly released Sonnet 4.6, timed out.

For developers, this highlights the risks of building on proprietary models. Unlike open-source models that can be hosted on independent hardware, users of Claude are tethered to Anthropic’s uptime. This event may accelerate the trend toward hybrid AI strategies, where companies use Meta’s Llama models or other open weights as a fallback during centralized API outages. The reliability of the API is paramount; if Claude cannot guarantee 99.9% uptime, it risks losing the enterprise trust it gained with the superior coding performance of Opus 4.5.
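To put the 99.9% figure in perspective, the downtime it permits is simple arithmetic. Assuming a 30-day month, a 365-day year, and that an entire incident window counts as downtime (SLA definitions vary, and partial brownouts are often measured differently):

```latex
\begin{aligned}
(1 - 0.999) \times 30 \times 24 \times 60\ \text{min} &= 43.2\ \text{min per month} \\
(1 - 0.999) \times 365 \times 24\ \text{h} &= 8.76\ \text{h per year}
\end{aligned}
```

On those assumptions, a single incident lasting six hours, the lower bound given for the February 18 disruption in the comparison table, would use up roughly 68% of a full year’s allowance (6 / 8.76 ≈ 0.68).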

Furthermore, the outage affects the burgeoning cloud gaming and streaming sectors that are beginning to integrate AI agents. Just as we have seen with Amazon Luna’s cloud infrastructure, the centralization of compute resources creates single points of failure. When the central “brain” goes offline, every connected service—from coding assistants to automated customer support agents—goes dark.
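A minimal sketch of the hybrid fallback strategy described in this section, assuming each provider is wrapped as a plain function that raises on failure. `hosted_api` and `self_hosted_open_weights` are hypothetical stubs standing in for a proprietary API and a self-hosted open-weights model; no real SDK calls are used.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider call that failed or timed out."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Callable[[str], str]]]
) -> Tuple[str, str]:
    """Try each (name, call) pair in order; return (provider_name, completion)."""
    failures: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            failures.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(failures))


def hosted_api(prompt: str) -> str:
    # Hypothetical stand-in for a proprietary hosted API during an outage.
    raise ProviderError("timeout")


def self_hosted_open_weights(prompt: str) -> str:
    # Hypothetical stand-in for a self-hosted open-weights model.
    return f"local answer to: {prompt}"


if __name__ == "__main__":
    chain = [("hosted", hosted_api), ("self_hosted", self_hosted_open_weights)]
    print(complete_with_fallback("summarize this ticket", chain))
    # ('self_hosted', 'local answer to: summarize this ticket')
```

The provider order encodes preference, so the primary model is always tried first. In practice, teams usually add per-call timeouts and a circuit breaker so a provider known to be down is skipped rather than retried on every request.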

Future Stability Forecast

Looking ahead, the stabilization of the Claude platform will likely take several weeks. The engineering team at Anthropic must optimize the inference efficiency of Sonnet 4.6 to reduce the load per user. This may involve “quantization” (reducing the precision of the model slightly to speed up processing) or more aggressive rate limiting for Free tier users.
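In its simplest textbook form, quantization stores each weight as an 8-bit integer plus one shared floating-point scale, halving memory relative to 16-bit weights at the cost of a bounded rounding error. The sketch below shows symmetric per-tensor int8 quantization in plain Python. It illustrates the general technique only and says nothing about what Anthropic actually deploys; the weight values are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127 so every q fits in a signed byte.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the shared scale."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.9]  # hypothetical weight values
    q, scale = quantize_int8(w)
    approx = dequantize_int8(q, scale)
    # The rounding error per weight is at most scale / 2.
    print(q, max(abs(a - b) for a, b in zip(w, approx)))
```

Production schemes usually refine this with per-channel or per-group scales, which keep the rounding error smaller when weight magnitudes vary widely across a tensor.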

Users should expect intermittent instability to continue through the end of February 2026. The transition to agentic AI, where models don’t just talk but *do* things, inherently increases the complexity of the stack. Until the hardware supply catches up with the software ambition, outages like this will remain a recurring feature of the AI landscape. For now, checking the Anthropic Status Page remains the first line of defense for frustrated users trying to navigate the turbulence of the generative AI revolution.