YouTube Outage 2026: Recommendation System Failure & Technical Analysis

Table of Contents

- Global Service Disruption: The February 2026 Blackout

- Technical Analysis: When Algorithms Break the Interface

- The “Ghost Town” UI and Feed Logic Errors

- Global Outage Statistics and Impact Data

- Debunking Solar Flare Interference Theories

- Agentic AI and Infrastructure Fragility

- Mobile Hardware Impact: iPhone 17 Performance

- Economic Ripple Effects for Creators and Advertisers

- Cloud Competitor Landscape: AWS vs. Google

- Communications Breakdown and Legacy Alternatives

- TeamYouTube Response and Recovery Timeline

- Future-Proofing Digital Video Infrastructure

YouTube outage reports flooded the internet on February 17 and 18, 2026, marking one of the most significant service disruptions in the platform’s recent history. Millions of users across the United States, India, the United Kingdom, and Australia were left staring at blank screens as the world’s largest video repository effectively went dark. Unlike traditional server failures characterized by 500 or 503 error codes, this incident manifested as a unique “content void,” where the platform loaded but refused to populate video feeds. This article provides an authoritative, technical deep dive into the causes, the scope of the disruption, and the implications for the future of AI-driven content delivery systems.

Global Service Disruption: The February 2026 Blackout

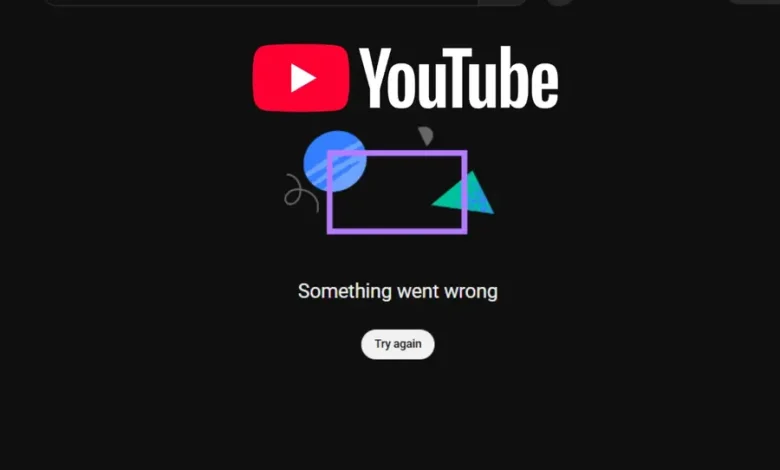

The disruption began late Tuesday evening, February 17, 2026, around 8:00 PM EST, and persisted well into the early hours of Wednesday, February 18. Users attempting to access the main YouTube site, YouTube Music, YouTube Kids, and the premium YouTube TV service encountered a broken interface. The sidebar menus, search bars, and account icons loaded correctly, but the central content feed, the algorithmic heart of the user experience, remained empty. Some users saw a generic “Something went wrong” error message, while others saw loading spinners that never resolved.

This was not a total network collapse but a specific functional paralysis. The ability to navigate the site’s skeleton without accessing its muscle (the videos) pointed immediately to a failure in the logic layer rather than the storage layer. As reports spiked on Downdetector, exceeding 320,000 in the US alone within the first hour, it became clear this was a systemic global failure. The timing was particularly disruptive, hitting prime-time viewing hours in North America and morning commute times in parts of Asia, causing a ripple effect across the digital economy.

Technical Analysis: When Algorithms Break the Interface

Google engineers officially identified the root cause of the YouTube outage as a critical failure in the recommendations system. In modern streaming architecture, the homepage is not a static list of files but a query result generated dynamically and personalized for each request. This system relies on two main stages: candidate generation (narrowing a catalog of billions of videos down to a few hundred) and ranking (scoring those candidates by the predicted probability that the user will engage with them).
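The two stages described above can be sketched in a few lines of Python. This is a toy illustration only, not YouTube's implementation: the `Video` fields, the topic-matching filter, and the affinity-times-quality score are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Video:
    video_id: str
    topic: str
    quality: float  # hypothetical prior engagement score in [0, 1]


def generate_candidates(catalog, user_topics, limit=300):
    """Stage 1: cheaply narrow the catalog to videos on topics the user follows."""
    matches = [v for v in catalog if v.topic in user_topics]
    return matches[:limit]


def rank(candidates, topic_affinity):
    """Stage 2: order candidates by a toy estimate of engagement probability."""
    def score(video):
        # Assumed scoring rule: user's affinity for the topic times the video's prior.
        return topic_affinity.get(video.topic, 0.0) * video.quality

    return sorted(candidates, key=score, reverse=True)


def build_home_feed(catalog, topic_affinity, limit=300):
    """Run both stages; an empty result is what leaves the homepage blank."""
    candidates = generate_candidates(catalog, set(topic_affinity), limit)
    return rank(candidates, topic_affinity)


catalog = [
    Video("a1", "cooking", 0.9),
    Video("b2", "chess", 0.8),
    Video("c3", "cooking", 0.4),
    Video("d4", "news", 0.95),
]
feed = build_home_feed(catalog, {"cooking": 0.5, "chess": 0.9})
print([v.video_id for v in feed])  # ['b2', 'a1', 'c3']
```

Note that if either stage returns nothing (here, a user with no usable affinity data), the feed is simply empty, which mirrors the symptom users reported.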

During the outage, the recommendation engine likely failed to return a valid candidate set. When the front-end application requested the list of videos to populate the “Home” or “Up Next” feeds, the backend returned null or malformed data. The modern YouTube interface depends entirely on that response: the UI cannot render a video card without the metadata provided by the recommendation service, so the entire page appeared broken. This highlights a critical fragility in hyper-personalized web design: when the personalization algorithm fails, the core utility of the product evaporates.
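To make the dependency concrete, the following sketch shows how a client might validate a feed response and fall back to a non-personalized list when the payload is null or malformed. The payload shape (`items`, `video_id`, `title`) and the function names are hypothetical, chosen for illustration; nothing here reflects YouTube's actual API or client code.

```python
def parse_feed(payload):
    """Return a list of renderable video cards, or None if the payload is unusable."""
    if not isinstance(payload, dict):
        return None  # null or wrong type: nothing to render
    items = payload.get("items")
    if not isinstance(items, list) or not items:
        return None  # missing or empty candidate set
    # Keep only entries that carry the metadata a video card needs.
    cards = [
        item for item in items
        if isinstance(item, dict)
        and isinstance(item.get("video_id"), str)
        and isinstance(item.get("title"), str)
    ]
    return cards or None


def render_home(payload, fallback_cards):
    """Use personalized cards when valid, otherwise degrade to a static fallback."""
    cards = parse_feed(payload)
    if cards is None:
        return {"source": "fallback", "cards": fallback_cards}
    return {"source": "personalized", "cards": cards}


FALLBACK = [{"video_id": "t1", "title": "Trending now"}]

print(render_home(None, FALLBACK)["source"])  # fallback
print(render_home({"items": [{"video_id": "a1", "title": "Hello"}]}, FALLBACK)["source"])  # personalized
```

Without a fallback path like this, a failed recommendation call leaves the page with nothing to draw, which matches the blank feed described above.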

The “Ghost Town” UI and Feed Logic Errors

Technically, this incident can be described as a “Ghost Town” UI glitch. The static assets (HTML/CSS frames) were delivered successfully via Google’s Content Delivery Network (CDN), which shows that the edge servers were operational. However, the API calls responsible for fetching video metadata (`/api/v1/feed/home` or similar internal endpoints) failed. This suggests that the failure occurred deep within the machine learning infrastructure, possibly introduced by a faulty model update or by corruption in the user history database that supplies the input features for the recommendation model.
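The diagnostic reasoning in this paragraph (static shell loads, feed data does not) can be expressed as a small classifier over probe results. This is a minimal sketch under stated assumptions: the `Diagnosis` labels and the `diagnose` function are invented for illustration, and the inputs stand in for whatever a real monitoring probe would collect.

```python
from enum import Enum


class Diagnosis(Enum):
    HEALTHY = "healthy"
    EDGE_FAILURE = "edge_failure"  # static shell itself did not load
    GHOST_TOWN = "ghost_town"      # shell loaded, feed came back unusable


def diagnose(static_status, feed_status, feed_items):
    """Classify an outage from the HTTP status of the static shell and the feed call.

    feed_items is the parsed list of feed entries, or None if parsing failed.
    """
    static_ok = 200 <= static_status < 300
    # A 2xx response with an empty or missing item list still counts as a failure:
    # the incident was described as a content void, not a wave of 500/503 errors.
    feed_ok = 200 <= feed_status < 300 and bool(feed_items)

    if not static_ok:
        return Diagnosis.EDGE_FAILURE
    if not feed_ok:
        return Diagnosis.GHOST_TOWN
    return Diagnosis.HEALTHY


print(diagnose(200, 200, []))  # Diagnosis.GHOST_TOWN
```

Treating an empty but successful response as a failure is the key design choice: status codes alone would have reported the service as healthy.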

Global Outage Statistics and Impact Data

The scale of the blackout was immense, affecting both consumer and enterprise tiers of the service. Below is a summary of the outage metrics observed across major regions during the peak disruption window.

| Region | Peak Reports (Downdetector) | Primary Symptoms | Recovery Duration |
|---|---|---|---|
| United States | 320,000+ | Blank Homepage, App Crash | ~2.5 Hours |
| United Kingdom | 100,000+ | Video Playback Failure | ~2 Hours |
| India | 200,000+ | Mobile App | |