OpenAI Sora: Complete Guide to the New Text-to-Video AI Model

OpenAI Sora has pushed generative AI beyond static image generation into dynamic, photorealistic video creation. As of February 14, 2026, this text-to-video model is among the most capable systems in generative media, able to simulate complex scenes with multiple characters, specific types of motion, and accurate details of the subject and background. While the initial announcement in 2024 stunned the technology sector, the rapid iterations and feature deployments leading up to early 2026 have solidified its role in professional workflows. This article provides an in-depth analysis of OpenAI Sora, covering its latest February 2026 updates, technical underpinnings, pricing controversies, and its impact on the creative economy.

OpenAI Sora: Comprehensive Overview

OpenAI Sora is a diffusion-based AI model designed to generate video content from textual instructions, static images, or existing videos. Earlier video generators struggled with temporal consistency and physics. Sora shows a markedly better, though still imperfect, grasp of how objects exist and interact in the physical world. It can generate videos up to a minute long, with longer sequences now possible through Extensions, while maintaining visual quality and adherence to the user’s prompt.

The model’s deep understanding of language allows it to interpret prompts accurately and create compelling characters that express vibrant emotions. Whether it is a complex camera pan through a cyberpunk city or a close-up of a woolly mammoth tramping through snow, Sora maintains coherence across frames, a challenge that plagued earlier generative video systems.

The Evolution of Generative Video Technology

To understand the magnitude of OpenAI Sora, one must place it within the broader history of generative AI. The journey began with Generative Adversarial Networks (GANs), whose early video experiments produced only short, blurry, low-resolution clips. Diffusion models, which power image generators such as DALL-E 3 and Midjourney, then revolutionized image generation. Sora extends the diffusion approach to the temporal dimension and pairs it with a transformer backbone (a “diffusion transformer”) that scales with data and compute.

Since its beta release, OpenAI has aggressively updated the model. Early versions were limited to 60-second clips with no audio. By early 2026, the integration of synchronized audio, improved frame rates, and higher resolutions (up to 1080p) has become standard. The shift from a research preview to a commercial product has been marked by significant milestones, including the introduction of ‘Director Mode’ for advanced camera controls and the controversial decision to restrict free tier access in January 2026.

Technical Architecture: How Sora Works

At its core, OpenAI Sora utilizes a diffusion transformer architecture. This hybrid approach combines the noise-removal capabilities of diffusion models with the scalability of transformers (the ‘T’ in GPT). Sora represents videos and images as collections of smaller units of data called “spacetime patches.”
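The “noise-removal” half of that description can be made concrete. Below is a minimal Python sketch of the generic DDPM-style forward noising process and its inversion. It assumes a linear beta schedule and the common noise-prediction (epsilon) parameterization. OpenAI has not published Sora’s exact schedule or parameterization, so `linear_alpha_bars`, `add_noise`, and `estimate_x0` are illustrative helpers, not Sora’s code. In a diffusion transformer, the transformer is the network trained to predict the noise `eps` from the noisy input `x_t`, the timestep, and the text conditioning.

```python
import numpy as np

def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t for a linear beta schedule (assumed, DDPM-style)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(a_bar_t) * x0, (1 - a_bar_t) * I)."""
    eps = rng.standard_normal(x0.shape)
    a_bar = alpha_bars[t]
    xt = np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * eps
    return xt, eps

def estimate_x0(xt, eps_pred, t, alpha_bars):
    """Invert the noising step given a (predicted) noise tensor.

    In training, a denoising network (a transformer, in Sora's case) learns to
    produce eps_pred; here we can pass the true noise to check the algebra.
    """
    a_bar = alpha_bars[t]
    return (xt - np.sqrt(1.0 - a_bar) * eps_pred) / np.sqrt(a_bar)
```

Generation runs this process in reverse. It starts from pure noise and repeatedly predicts and removes noise over many steps, which is one reason video generation is slow and compute-hungry.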

Spacetime Patches

Just as large language models (LLMs) process text tokens, Sora processes visual patches. According to OpenAI’s technical report, a video compression network first reduces raw video to a lower-dimensional latent representation. That latent is then cut into spacetime patches and flattened into a sequence that the transformer can analyze. This method allows the model to train on a vast array of visual data, spanning different durations, resolutions, and aspect ratios. By treating video as patches, Sora can generate content for various devices, from widescreen cinematic formats to vertical smartphone screens, without cropping or distorting the composition.
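The patch idea can be sketched in a few lines of NumPy. This is an illustration under stated assumptions. It patchifies a raw (frames, height, width, channels) array, whereas Sora patchifies a compressed latent. The patch sizes are also arbitrary choices, not Sora’s.

```python
import numpy as np

def patchify(video, pt, ph, pw):
    """Split a (T, H, W, C) array into flattened spacetime patches.

    Returns shape (num_patches, pt * ph * pw * C): one row per "token".
    T, H and W must be divisible by pt, ph and pw respectively.
    """
    T, H, W, C = video.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    x = video.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    # Move the three patch-grid axes to the front and the patch-content axes to the back
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, pt * ph * pw * C)

def unpatchify(patches, shape, pt, ph, pw):
    """Inverse of patchify: rebuild the original (T, H, W, C) array."""
    T, H, W, C = shape
    x = patches.reshape(T // pt, H // ph, W // pw, pt, ph, pw, C)
    # Interleave grid and in-patch axes back into (T, H, W) order
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)
    return x.reshape(T, H, W, C)
```

A landscape clip and a portrait clip with the same pixel count produce the same number of tokens, just in a different arrangement. This is why a transformer over patches can consume native aspect ratios without cropping.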

3D Consistency and Object Permanence

One of Sora’s most significant technical achievements is its grasp of 3D geometry. Although the model has no explicit 3D representation, it behaves as if it were simulating a 3D environment: as the camera moves, people and scene elements move consistently through space. It also maintains a degree of object permanence. If a character walks behind a tree, they re-emerge on the other side correctly. This has led some to suggest that the model has learned an implicit physics engine for gravity, collision, and texture interaction. OpenAI itself notes, however, that Sora still fails to model some basic interactions accurately, such as glass shattering.

Latest Updates: Extensions and Cameos (Feb 2026)

The first quarter of 2026 has been a whirlwind of feature drops for OpenAI Sora users. According to the latest release notes from the OpenAI Help Center, several game-changing features have gone live as of February.

Video Extensions (Released Feb 9, 2026)

The “Extensions” feature addresses one of the primary limitations of generative video: length. Users can now seamlessly continue any video draft. By selecting “Extend” and providing a new prompt, Sora generates the next sequence while preserving the characters, setting, and lighting of the original clip. This allows creators to build longer narrative arcs, stitching together multiple generations into a cohesive story.
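Extensions generate new footage that continues a draft, which is different from simply joining clips. Sometimes, though, you have already downloaded several finished generations as MP4 files and only need to join them end to end. The following is a minimal sketch that uses FFmpeg’s concat demuxer for that job. It assumes FFmpeg is installed and that all clips share the same codec, resolution, and frame rate, which `-c copy` requires. The file names are hypothetical.

```python
from pathlib import Path

def write_concat_list(clips, list_path):
    """Write an FFmpeg concat-demuxer list file naming each clip in order."""
    lines = []
    for clip in clips:
        # Each line has the form: file 'path'
        # A literal single quote inside a quoted path is written as '\''
        escaped = str(clip).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    Path(list_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return lines

def concat_command(list_path, output_path):
    """Build (but do not run) the FFmpeg command that joins the listed clips."""
    # -safe 0 permits absolute or unusual paths in the list file;
    # -c copy joins streams without re-encoding, so clip settings must match.
    return ["ffmpeg", "-f", "concat", "-safe", "0",
            "-i", str(list_path), "-c", "copy", str(output_path)]
```

Usage: after `write_concat_list(["scene1.mp4", "scene2.mp4"], "clips.txt")`, run `subprocess.run(concat_command("clips.txt", "story.mp4"), check=True)`. If the clips were exported with different settings, drop `-c copy` and let FFmpeg re-encode.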

Image-to-Video with People (Released Feb 4, 2026)

Perhaps the most requested and sensitive feature, the ability to animate static photos of people, is now live for eligible users. This feature enables users to upload photos of family or friends and bring them to life. However, OpenAI has implemented strict guardrails. Users must attest to having consent from the individuals featured. This update follows the success of similar features in image generation tools but comes with enhanced safety protocols to prevent deepfake misuse.

Character Cameos and Storyboards

Building on the “Character Cameos” feature introduced in late 2025, users can now save and reuse custom characters across different videos. This is crucial for brand consistency and storytelling. Additionally, the new Storyboard mode (beta) allows creators to sketch out scenes second-by-second, offering granular control over the pacing and composition before the final render.
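OpenAI has not published a data format for storyboards. The following is therefore a purely hypothetical Python model of the idea. A clip is a sequence of timed cards, each with its own prompt, and a simple validator catches overlapping cards or cards that run past the clip’s length. `StoryboardCard` and `validate_storyboard` are illustrative names, not part of any Sora API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class StoryboardCard:
    """Hypothetical storyboard beat: a prompt that applies from start_s to end_s."""
    start_s: float
    end_s: float
    prompt: str

def validate_storyboard(cards, clip_length_s):
    """Return a list of problems; an empty list means the timeline is consistent."""
    problems = []
    ordered = sorted(cards, key=lambda c: c.start_s)
    for card in ordered:
        if card.end_s <= card.start_s:
            problems.append(f"card '{card.prompt}' has non-positive duration")
        if card.start_s < 0 or card.end_s > clip_length_s:
            problems.append(f"card '{card.prompt}' falls outside 0-{clip_length_s}s")
    # Adjacent cards (in start-time order) must not overlap
    for prev, nxt in zip(ordered, ordered[1:]):
        if nxt.start_s < prev.end_s:
            problems.append(f"cards '{prev.prompt}' and '{nxt.prompt}' overlap")
    return problems
```

Gaps between cards are allowed in this sketch. A stricter version could also require the cards to cover the whole clip.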

Pricing Structures and Availability Changes

The transition from a research preview to a paid service has been a major talking point in 2026. As of January 10, 2026, OpenAI adjusted its pricing policy, effectively ending the free tier for video generation. Access to Sora is now exclusive to ChatGPT Plus and Pro subscribers.

- ChatGPT Plus ($20/month): Includes a limited monthly allowance of video generation credits, standard processing speed, and access to the basic editing tools.

- ChatGPT Pro ($200/month): Designed for power users and studios, this tier offers higher generation limits, priority processing, 1080p resolution upscaling, and early access to features like Storyboards.

This shift reflects the massive computational cost associated with video generation. Rendering high-definition video requires significantly more GPU power than text or image generation.
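A rough back-of-the-envelope calculation shows why video costs so much more than images for a patch-based transformer. The example compares a single 1080p image with a 10-second 1080p clip at 24 fps. It assumes hypothetical patch sizes of 16x16 pixels by 4 frames, treats the image as a one-frame video, and counts patches in pixel space rather than in a compressed latent. It also assumes full self-attention, whose cost grows with the square of the sequence length. The absolute numbers are illustrative, not Sora’s actual costs; the ratios are the point.

```python
import math

def num_spacetime_tokens(frames, height, width, pt, ph, pw):
    """Patch count for a clip, rounding partial patches up (as if padded)."""
    return math.ceil(frames / pt) * math.ceil(height / ph) * math.ceil(width / pw)

# Hypothetical patch size: 4 frames deep, 16x16 pixels. An image is one frame.
image_tokens = num_spacetime_tokens(1, 1080, 1920, pt=4, ph=16, pw=16)
video_tokens = num_spacetime_tokens(10 * 24, 1080, 1920, pt=4, ph=16, pw=16)

token_ratio = video_tokens / image_tokens
attention_ratio = token_ratio ** 2  # full self-attention cost ~ sequence length squared
print(image_tokens, video_tokens, token_ratio, attention_ratio)
# 8160 489600 60.0 3600.0
```

A diffusion model also repeats this computation at every denoising step, which widens the gap further.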

Safety Measures and Red Teaming Protocols

With great power comes great responsibility, and OpenAI Sora’s realistic capabilities pose significant ethical risks. To mitigate the potential for misinformation and non-consensual content, OpenAI has deployed a multi-layered safety strategy.

C2PA and Watermarking

All videos generated by Sora contain C2PA (Coalition for Content Provenance and Authenticity) metadata. This cryptographically signed, invisible record identifies the content as AI-generated. Additionally, visual watermarks are embedded in the lower corner of videos. Because sophisticated users often attempt to crop these out, the C2PA metadata is the more robust line of defense. It is not tamper-proof, however: metadata can itself be stripped, for example by re-encoding or screen-recording a video.
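The core idea behind C2PA’s tamper evidence is a signed claim that is cryptographically bound to a hash of the content. The Python sketch below is a toy model of that binding only. Real C2PA manifests are embedded in the file and signed with X.509 certificates and COSE signatures. For simplicity, this example substitutes a shared HMAC key (`SIGNING_KEY`, hypothetical) and a plain dictionary.

```python
import hashlib
import hmac
import json

# Toy stand-in for a C2PA signer; real C2PA uses certificate-based signatures.
SIGNING_KEY = b"hypothetical-demo-key"

def make_manifest(content: bytes, generator: str) -> dict:
    """Create a signed claim that binds the generator name to the content hash."""
    claim = {"generator": generator, "sha256": hashlib.sha256(content).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode()
    claim_sig = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": claim_sig}

def verify(content: bytes, manifest: dict) -> bool:
    """Check the claim's signature, then check that it matches these exact bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was altered
    # Hard binding: any edit to the content changes its hash
    return manifest["claim"]["sha256"] == hashlib.sha256(content).hexdigest()
```

The toy shares one limit with the real thing: verification only works if the manifest travels with the file. A video whose metadata has been stripped simply has nothing to check, so the absence of C2PA data does not prove a video is authentic.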

Red Teaming

Before every major release, including the recent February 2026 updates, Sora undergoes extensive “red teaming.” OpenAI employs domain experts in misinformation, hate speech, and bias to try to break the model’s safeguards. These adversarial tests help refine the text classifiers that reject harmful prompts, such as requests for violent content, celebrity deepfakes (without authorization), or sexual material.
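Red teaming is ultimately measured against the filters it probes. The sketch below is a hypothetical illustration, not OpenAI’s pipeline. It assumes some upstream classifier returns a per-category score between 0 and 1 for each prompt (stubbed here as dictionaries). The gate blocks a prompt when any score reaches its threshold, and the sketch computes a bypass rate: the fraction of known-harmful red-team prompts that slip through. The category names and threshold values are invented for the example.

```python
# Hypothetical per-category thresholds; real systems tune these empirically.
THRESHOLDS = {"violence": 0.5, "sexual": 0.3, "impersonation": 0.4}

def is_blocked(scores):
    """Block the prompt if any category score meets or exceeds its threshold."""
    return any(scores.get(cat, 0.0) >= thr for cat, thr in THRESHOLDS.items())

def bypass_rate(red_team_results):
    """Fraction of known-harmful red-team prompts that the gate failed to block.

    red_team_results is a list of (scores, is_harmful) pairs.
    """
    harmful = [scores for scores, is_harmful in red_team_results if is_harmful]
    if not harmful:
        return 0.0
    missed = sum(1 for scores in harmful if not is_blocked(scores))
    return missed / len(harmful)
```

If a red-team round surfaces a high bypass rate in one category, that shows where the classifier or its threshold needs work before release.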

Comparison: Sora vs. Runway Gen-3 vs. Pika

While OpenAI Sora garners the headlines, competition is fierce: Runway Gen-3 and Pika Labs have also made significant strides. The following table compares the current state of these leading models as of early 2026.

| Feature | OpenAI Sora | Runway Gen-3 Alpha | Pika 2.0 |
|---|---|---|---|
| Max Duration | 60s+ (via Extensions) | 40s (Extendable) | 30s |
| Resolution | Up to 1080p | Up to 4K | 1080p |
| Consistency | Excellent (3D Object Permanence) | High (Motion Brush control) | Good (Strong on animation) |
| Audio Generation | Native & Synchronized | External integration | Lip-sync & SFX |
| Pricing | Included in Plus/Pro ($20+) | Credit-based subscription | Freemium model |
| Special Features | Spacetime patches, Extensions | Motion Brush, Camera Control | Lip Sync, Modify Region |

Impact on Hollywood and Creative Industries

The release of OpenAI Sora has sent shockwaves through the entertainment industry. Filmmakers, advertisers, and game developers are simultaneously excited and apprehensive. For independent creators, Sora democratizes high-end visual effects that previously required million-dollar budgets. A single creator can now visualize a sci-fi blockbuster scene or a historical documentary segment in minutes.

However, the labor implications are profound. Concept artists, storyboarders, and stock footage videographers face an existential threat. Tyler Perry notably paused an $800 million studio expansion after witnessing Sora’s capabilities, citing the reduced need for physical sets and location shoots. The industry is currently in a period of adaptation, where “AI Director” is emerging as a legitimate job title, blending prompt engineering with traditional cinematic theory.

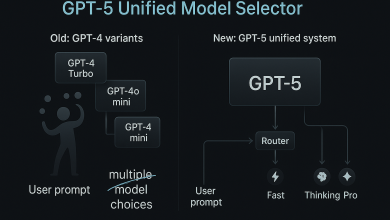

The Future Roadmap: Anticipating the Next Sora Release

Looking ahead, the roadmap for Sora involves deeper integration with other modalities. Sora 2 launched in September 2025, and rumors and beta leaks suggest that its successor (anticipated late 2026) will focus on even longer clips, potentially handling full scenes with dialogue-heavy scripts. The integration of GPT-5 reasoning capabilities could allow users to provide a script and have Sora act as the director, determining camera angles and blocking automatically.

Another frontier is real-time generation. Currently, high-quality video generation takes minutes. Reducing this latency to near real-time could enable interactive experiences, such as AI-generated video games or responsive educational tutors.

Conclusion: Navigating the Generative Video Era

OpenAI Sora represents a pivotal moment in the history of content creation. As of February 2026, it has matured from a viral curiosity into a professional tool with robust features like Extensions, C2PA authentication, and high-fidelity physics simulation. While challenges regarding copyright, safety, and employment remain, the technology’s trajectory is undeniable. For creators and businesses alike, mastering Sora is no longer optional—it is a requisite skill for the future of digital storytelling. For further reading on the latest AI tools, visit the official OpenAI Sora page.